The scientific vacuity of ID: design inference versus "Design Inference"

By Pim van Meurs

Posted November 06, 2006

On Evolution News, Casey Luskin makes the following claim:

Luskin is correct to point out that seismologists have made a design inference. What Luskin fails to tell you is that this design inference has little relevance to Intelligent Design's "Design Inference". Let me explain why Luskin's claim shows that Intelligent Design has failed to address many of the criticisms raised, and that ID's concessions have rendered it scientifically vacuous. See also SETI, archeology and other sciences at Skeptico's blog for why Luskin's arguments fail.

In the past, I and various others have pointed out how ID argues on the one hand that science excludes design inferences, and on the other hand that science has successfully applied design inferences in such areas as archeology and criminology. The resolution of this apparent contradiction is simple: when ID refers to design inference, it is actually talking about the "Design Inference" proposed by Dembski. This "Design Inference" differs in many important ways from how science applies its design inferences. The most important difference is that Dembski's "Design Inference" attempts, with limited success, to detect design through elimination, while science, with considerable success, eliminates hypotheses by matching positive signatures.

The example proposed by Luskin is no different. Notice that the seismologists did not apply the "Design Inference", which would have required several steps: first, the signal has to be shown to be 'specified'; second, the signal has to be complex, which requires that it cannot be explained by known regularities or chance occurrences. One could argue that the specification criterion is met by a comparison with previous known nuclear tests, but that would be cheating.

Source: William Dembski, Intelligent Design as a Theory of Information (sic)

So how about complexity?

But this raises a significant problem for ID: it will either have to accept both nuclear explosions and natural earthquakes as 'specified', or neither one will meet the specification requirement. That a design inference is not necessarily the result of intelligent agency is something that most ID activists simply overlook, and yet Dembski was clear on this, as was pointed out by Del Ratzsch:

Del Ratzsch in "Nature, Design, and Science: The Status of Design in Natural Science", SUNY Press, 2001.

More recently, Ryan Nichols pointed out that Dembski has made a significant concession:

Source: Ryan Nichols, The Vacuity of Intelligent Design Theory

Certainly, such a specification would also render the signal simple rather than complex, as it can be shown to match a known regularity. Intelligent Design, however, relies on an absence of known regularities to infer its 'Design Inference'.

So assume seismologists detect a particular event. The conclusion is that a design inference can be made whether the event was a natural earthquake or a nuclear explosion. In the former case, the designer is the natural processes in the interior of the earth; in the latter, the natural processes of nuclear fission, set in motion by a nuclear bomb.

Luskin's example also shows why the paper by Wilkins and Elsberry titled The advantages of theft over toil: the design inference and arguing from ignorance is still very relevant. The authors show that 'design' involves two different categories: "… the ordinary kind based on a knowledge of the behavior of designers, and a "rarefied" design, based on an inference from ignorance, both of the possible causes of regularities and of the nature of the designer".
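To make the eliminative structure concrete, here is a toy sketch of an inference that reaches 'design' only after regularity and chance have been ruled out. It is an illustration of the general shape of the argument, not Dembski's own formalism: the function name, its parameters, and the example inputs are all hypothetical, and the default `bound` of 1e-150 is an assumption borrowed from Dembski's universal probability bound, which this article does not discuss.

```python
from typing import Optional


def eliminative_inference(
    known_regularity: Optional[str],
    chance_probability: float,
    specified: bool,
    bound: float = 1e-150,  # assumed threshold, not taken from this article
) -> str:
    """Toy eliminative filter: 'design' is whatever survives elimination."""
    # Step 1: any known regularity that explains the event ends the inquiry.
    if known_regularity is not None:
        return "regularity"
    # Step 2: an event that is not improbable enough, or not specified,
    # is attributed to chance.
    if chance_probability > bound or not specified:
        return "chance"
    # Step 3: only what could not be eliminated is labelled 'design'.
    return "design"


# A seismic signal that matches a known signature is explained by that
# regularity, so the filter never gets as far as 'design'.
print(eliminative_inference("matches known explosion signature", 1e-200, True))
# With no known regularity, the same inputs fall through to 'design'.
print(eliminative_inference(None, 1e-200, True))
```

Note that the 'design' branch is reached purely by exclusion: nothing in the function consults any knowledge about designers or their signatures.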

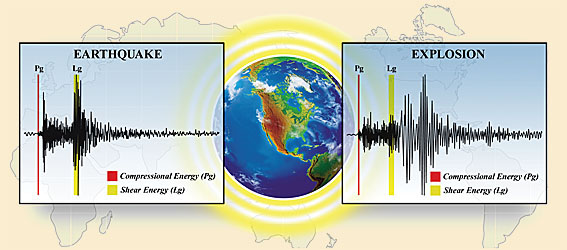

So let's compare how science infers design with how ID infers 'Design'.

Scientific design inference

Science: We know from extensive testing and validation that the signature of natural earthquakes differs significantly from that of nuclear explosions. In fact, the ratio of P-wave to S-wave amplitudes tends to be higher for nuclear explosions. Rather than relying on ignorance, scientists take measurements and build models, and this case is not much different:

Similarly, in Seismic Monitoring Techniques Put to a Test, scientist Bill Walter explains how science detects 'foul play'. First of all, scientists have access to a large variety of data, but for the moment I shall limit the available data to seismology. The first indication of a nuclear explosion is when the actual seismogram differs from typical seismograms found in the region.

Scientists then look at P and S waves. P waves are compression waves and S waves are transverse or shear waves. Based on scientific principles, one would expect that explosions will show large P waves and weak S waves and earthquakes would show just the opposite.

And finally scientists compare the seismogram with earlier seismograms of similar events.
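The last two checks described above (P versus S amplitude, then comparison with earlier events) can be sketched in a few lines. This is a minimal illustration of matching against positive signatures, not the method used by any monitoring agency: the reference amplitudes are invented numbers in arbitrary units, and the names `REFERENCE_EVENTS`, `log_ps_ratio` and `classify` are hypothetical.

```python
import math

# Hypothetical reference events: (label, P amplitude, S amplitude).
# These values are invented for illustration; they are not measured data.
REFERENCE_EVENTS = [
    ("earthquake", 1.0, 3.0),
    ("earthquake", 1.2, 2.8),
    ("explosion", 3.0, 1.0),
    ("explosion", 2.6, 1.1),
]


def log_ps_ratio(p_amp: float, s_amp: float) -> float:
    """Log10 of the P-to-S amplitude ratio; higher suggests an explosion."""
    return math.log10(p_amp / s_amp)


def classify(p_amp: float, s_amp: float, references=REFERENCE_EVENTS) -> str:
    """Label a new event by its closest known event in log P/S ratio."""
    target = log_ps_ratio(p_amp, s_amp)
    label, _distance = min(
        ((lab, abs(log_ps_ratio(p, s) - target)) for lab, p, s in references),
        key=lambda pair: pair[1],
    )
    return label


print(classify(2.8, 0.9))  # strong P, weak S
print(classify(0.9, 2.5))  # weak P, strong S
```

Both labels are reached the same way, by resemblance to known events. Nothing is inferred from a failure to find an explanation.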

It should be clear by now that seismologists do not conclude 'we cannot find any regularity or chance explanations, thus designed', but rather rely on comparisons with known events, both natural and 'designed'.

The ID Design Inference

Remind us again what models Intelligent Design presents in support of its thesis? Nada… Niente… Nothing… Niets… Nichts…

Final Note: As some have pointed out, and I will quote scientists from the University of Leeds, the low-magnitude event (0.5-2 kT) suggests one of the following hypotheses:

1. North Korea successfully detonated a low-yield device (which is harder to design than a typical "first-design" weapon which would deliver in the 5-15 kT range).


Seismograms show the amplitude of shear energy (Lg) is larger for an earthquake than for an explosion. Scientists at PNNL are using compressional (represented by Pg) and shear wave data from seismic events to build statistical models that will ultimately help distinguish earthquakes from explosions.

Seismic signal on vertical components of broadband stations operated by the IPE, BP filter 0.8-2.8 Hz.
